The Zurich Frontend Conference is a wonderful event focused on design and technology in Zurich. The emphasis is on visual and web design, but topics like Backbone.js, responsive web design, typography, and user experience often come up in conversations and the associated presentations. I personally have learned a lot about UX and UI design by interacting with groups like the UX Book Club and UX Chuchi meetups in Zurich, and presenting at the Frontend Conference was a great way to bring together our learnings from user experience (UX) and wearable computing.

The Rise of IoT

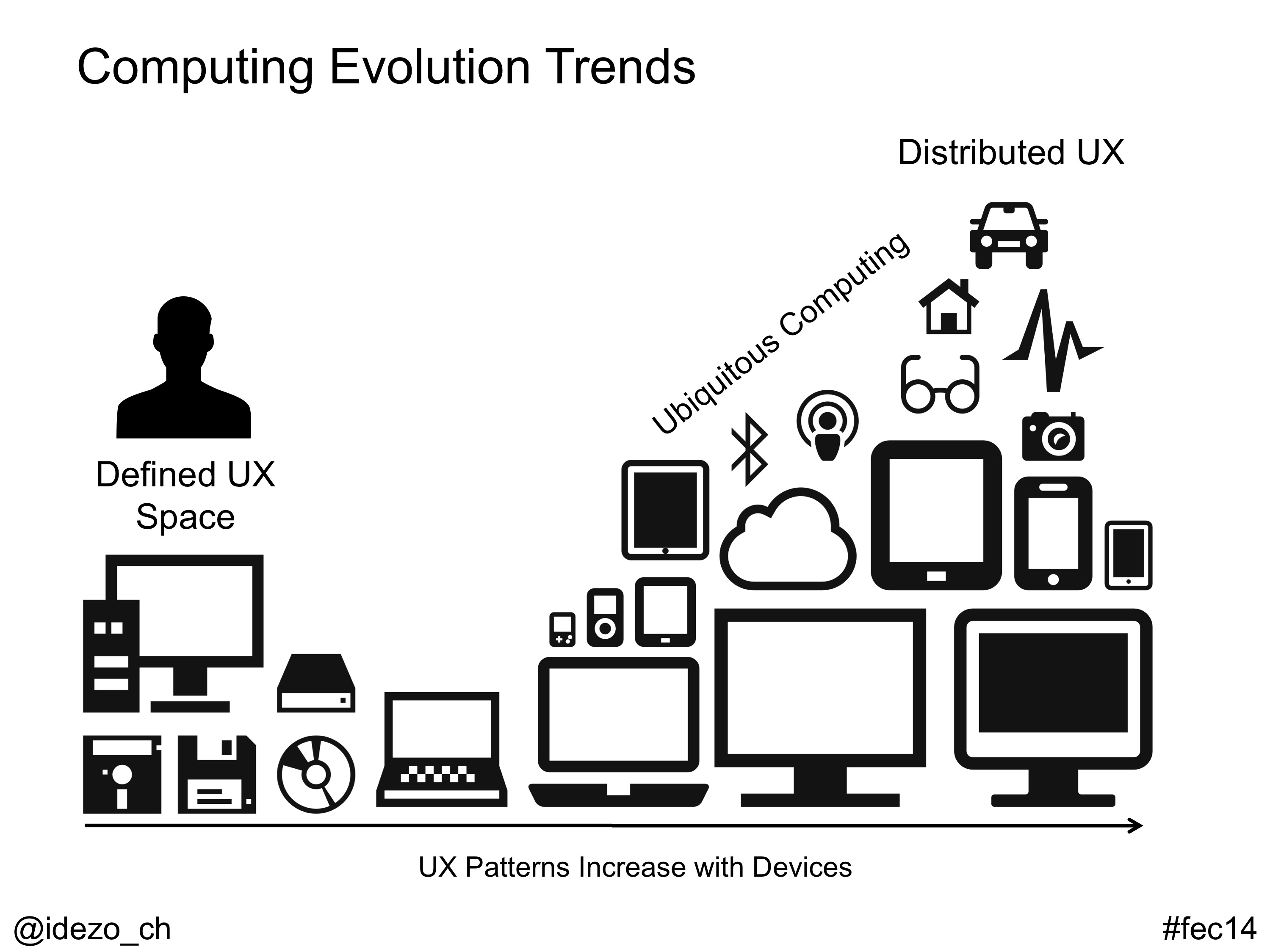

Wearable computing can be loosely characterized as putting sensors on the body and using the associated data in application development in some way. Wearables are really a subset of the current technology trend towards ubiquitous computing and the Internet of Things (IoT), where everything from your car to your watch, couch, refrigerator, pencil, tablet, shoes, glasses, and probably cat (via a smart collar) can talk and interact with one another. In other words, computers everywhere. This trend is driven by a reduction in the cost and physical size of sensors, processors, and memory, along with the cloud infrastructure and network connectivity to bring cohesion to everything.

Distributed UX

One result of the trend towards ubiquitous computing is that there will be more devices to interact with. But how do we know how to interact with each of them? Everyone knows what a laptop computer is, but only because we have been taught from an early age what it is. Ask someone what a laptop is and they will tell you it’s a portable computer. Naturally it has a screen and keyboard; the form and function of the product are well defined. This isn’t true for things like smart watches and glasses. Those product categories are still being tested in the market to see what society will accept. Now, what happens when we see a further explosion of devices? Before, we could design for one or two closed systems: a webpage on a computer screen, a mobile app on a smartphone. In the future of connected devices, we will likely need to design for the whole ecosystem of connected products, rather than for one or two discrete physical devices.

Quantified User

If you’re on the web every day, you have a data profile. You may not know what it is or what it looks like, but the companies that track you do. With wearables, we are now moving towards having quantified users. This means that all of your data and habits are tracked and quantified in order to deliver products and experiences to you, sometimes before you know exactly what it is that you need. The Quantified User is an extension of the Quantified Self movement, where people knowingly track a multitude of things like steps (with a Fitbit), blood sugar, heart rate, etc. The difference between the two is that the vast majority of people are too lazy to be their own data scientist. Even if you track all of these data sets about yourself, you still need to interpret the data and decide how to improve your life. In the future, there will be the option to let the apps do that for you.

Sensors

Just as it is important for a UI designer to know the basics of CSS/HTML, and maybe some JavaScript, it helps if UX designers for wearables know something about the physical sensors that are common in smartphones, watches, etc. I presented a bit about the sensor anatomy of smart devices, to give an awareness of how data from those sensors can be used to design applications. Near-future smartphones will include things like thermal cameras and 3D depth sensors (like the Microsoft Kinect), opening up new use cases, like stealing passwords from ATMs and banking systems or maybe assessing the emotional state of the person you’re speaking with. However, the best sensor designs are the ones that no one sees. For example, the Moticon insole tracks the reaction force on the feet as a person walks, so the data can be retrieved for medical research on patients recovering from leg surgeries like hip or knee replacements. The user can gather tons of very valuable research data without ever interacting with that data profile of themselves.

UX for Health Products

As an example of bringing UX together with hardware, I presented patient empowerment from a user need and technology viewpoint. The rising sea of IoT and associated technologies easily gives the impression of a product landscape that is nearly impossible to navigate. In the end, though, technology is still just there for people, and if you focus first on the UX use case, then it’s just a question of prototyping and fitting the right technology to fill that user need. With prototyping products like the Arduino and add-on boards like the Cooking Hacks eHealth platform, it’s straightforward to define user needs with interaction design workflows and then implement solutions in hardware prototypes. The full presentation can be viewed on SlideShare.